The Concept

The concept of a matrioshka brain comes from the idea of using Dyson spheres to power an enormous, star-sized computer. The term “matrioshka brain” originates from matryoshka dolls, which are wooden Russian nesting dolls. Matrioshka brains are composed of several Dyson spheres nested inside one another, the same way that matryoshka dolls are composed of multiple nested doll components.

The innermost Dyson sphere of the matrioshka brain would draw energy directly from the star it surrounds and give off large amounts of waste heat while computing at a high temperature. The next surrounding Dyson sphere would absorb this waste heat and use it for its computational purposes, all while giving off waste heat of its own. This heat would be absorbed by the next sphere, and so on, with each sphere radiating at a lower temperature than the one before it. For this reason, Matrioshka brains with more nested Dyson spheres would tend to be more efficient, as they would waste less heat energy. The inner shells could run at nearly the same temperature as the star itself, while the outer ones would be close to the temperature of interstellar space. The engineering requirements and resources needed for this would be enormous.
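The cascade described above can be sketched numerically. The snippet below is a minimal illustration only, assuming each shell behaves as an ideal Carnot engine that takes in heat at its own temperature and rejects it at the temperature of the next shell out. The shell temperatures and the function name `cascade_work` are hypothetical, chosen for illustration rather than taken from any design.

```python
def cascade_work(input_power, temps_kelvin):
    """Return (work_per_shell, waste_power) for an idealized Carnot cascade.

    temps_kelvin lists temperatures from hottest to coldest; stage i takes
    heat in at temps_kelvin[i] and rejects it at temps_kelvin[i + 1].
    """
    heat = input_power
    work = []
    for hot, cold in zip(temps_kelvin, temps_kelvin[1:]):
        extracted = heat * (1.0 - cold / hot)  # Carnot limit for this stage
        work.append(extracted)
        heat -= extracted  # the remainder flows outward as waste heat
    return work, heat


# Hypothetical temperatures in kelvin (assumption, not from the article).
temps = [5000.0, 1000.0, 300.0, 50.0]
work, waste = cascade_work(1.0, temps)  # star's output normalised to 1
for i, w in enumerate(work, start=1):
    print(f"shell {i}: {w:.3f} of the star's output")
print(f"radiated to space: {waste:.3f}")
```

In this idealized model the fraction finally radiated away equals the coldest temperature divided by the hottest, so extra shells help to the extent that they let the cascade reach a colder outer layer.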

What would it even do?

Imagine. We have vastly more abstract concepts and emotions available to us than chimps do, and we don’t really have that much more brain matter. If a relatively slight increase took us from thinking about survival to thinking about infinity and divinity, then a matrioshka brain could think of truly unfathomable things and bring them all to life in simulation.

Why would we need such computing power?

If you’ve seen the Transhumanism or the Simulation Hypothesis videos on YouTube, you can probably guess why you might. Storing trillions of trillions of uploaded digital human minds or whole brain emulations, and simulating whole universes, are two of the reasons you might build computers like this. A third is that it might be one single immense brain, as the second half of the name implies. Even for brains this huge, with processing power potentially in excess of 10^49 hertz, trillions of trillions of trillions of times faster than our best modern computers, there are still a lot of problems that would take even a computer like this eons to make a dent in.

Why are these layers ‘nested’?


A Matrioshka brain is a mega-structure in space, based on the Dyson sphere, with immense computational capacity.

Mega-scale engineering is the construction of structures at least 1,000 km (about 621 mi) in length, ranging in size from an asteroid to an entire star. A Matrioshka brain is an example of a Class B stellar engine, employing the entire energy output of a star to drive computer systems.

A Dyson sphere is a hypothetical mega-structure that surrounds a star to collect its energy.

The concept was first proposed in 1960 by physicist and astronomer Freeman Dyson.


A brief look at Computational Power:

Assuming a power budget of 1.87×10^26 W (possibly solar) and an efficiency of 6 GFLOPS/W, the estimated computational power is 1.12×10^36 FLOPS. This is for Type I prototypes.
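The Type I estimate is simply the power budget multiplied by the efficiency. As a quick check, using only the two numbers quoted above (the variable names are illustrative):

```python
power_watts = 1.87e26   # power budget quoted in the text
flops_per_watt = 6e9    # 6 GFLOPS per watt, as quoted in the text

total_flops = power_watts * flops_per_watt
print(f"{total_flops:.3g} FLOPS")  # prints 1.12e+36 FLOPS
```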

A nanomechanical implementation of the computronium, which would allow us to push close to the Landauer limit of energy efficiency, could generate some 10^47 FLOPS. This is for Type II.

Alternatively, with the Sun as the power source and the ideal thermodynamic efficiency of a Carnot engine, the estimated computational power is 4×10^48 FLOPS. This is also for Type II.

A galaxy used by Matrioshka brains has an estimated computational power of 5×10^58 FLOPS. This is for Type III.

A UV brain is capable of 10^90 operations per second.

If all matter in the universe were turned into a black hole, it would have a lifetime of 2.8×10^139 seconds. During that lifetime, such a brain would perform 2.8×10^229 operations.

If there are 10^500 kinds of universes in a multiverse, then a quantum computronium MV brain could manage around 10^600 operations per second.
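The lifetime total for the black-hole case is the operation rate multiplied by the lifetime. The sketch below checks that product using the two figures quoted above, and also shows the same sum in base-10 logarithms, which is a convenient way (an assumption of this sketch, not something the source prescribes) to handle exponents too large for ordinary floating point.

```python
import math

ops_per_second = 1e90        # UV brain rate quoted in the text
lifetime_seconds = 2.8e139   # black-hole lifetime quoted in the text

# Direct product; still within the range of a 64-bit float.
total_ops = ops_per_second * lifetime_seconds
print(f"{total_ops:.2g} operations")

# Same calculation in log10 space: exponents simply add.
log10_total = math.log10(ops_per_second) + math.log10(lifetime_seconds)
print(f"about 10^{log10_total:.2f} operations")
```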

*UV brain: Universe brain. A hypothetical entity capable of creating whole worlds. Some speculate that our universe is just the creation of a UV brain.

*MV brain: Multiverse brain. A hypothetical entity capable of computing in a fourth dimension. It can create and delete universes.

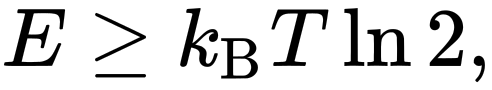

Landauer’s principle states that the minimum energy needed to erase one bit of information is proportional to the temperature at which the system is operating. Specifically, the energy needed for this computational task is given by

$$E = k_B T \ln 2$$

where $k_B$ is the Boltzmann constant and $T$ is the temperature in kelvins. At room temperature, the Landauer limit represents an energy of approximately 0.018 eV (2.9×10^-21 J). As of 2012, modern computers use about a billion times as much energy per operation.
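The room-temperature figure can be reproduced directly from the formula. The sketch below assumes 300 K for "room temperature" and, as a further assumption not stated in the text, uses the nominal solar luminosity of 3.828×10^26 W to see how many bit erasures per second a star's full output could pay for at that limit. The function name `landauer_limit_joules` is illustrative.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23   # exact SI value
ELECTRON_VOLT_J = 1.602176634e-19  # exact SI value


def landauer_limit_joules(temperature_kelvin):
    """Minimum energy to erase one bit at the given temperature."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


room_temperature = 300.0  # K, assumed value for "room temperature"
energy = landauer_limit_joules(room_temperature)
print(f"{energy:.2e} J = {energy / ELECTRON_VOLT_J:.3f} eV")

# Assumption: nominal solar luminosity, not a figure from the article.
SOLAR_LUMINOSITY_W = 3.828e26
erasures_per_second = SOLAR_LUMINOSITY_W / energy
print(f"{erasures_per_second:.1e} bit erasures per second")
```

If one bit erasure is loosely equated with one operation, the result lands at the same order of magnitude as the 10^47 figure quoted for a near-Landauer-limit implementation. Because the limit scales with temperature, colder shells pay less energy per erased bit.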

These are computing scales. Computation is measured in floating point operations per second (FLOPS).

Data is in bits where 8 bits = 1 byte.

Computing scales “The Table”